Category: Linux

-

NetworkManager exit status 1

Recently reinstalled NextDNS on an RPi4 (64-bit) and came across this error: It seems like NextDNS was actually running, but just throwing an error when running `nextdns activate`. Restarting did seem to work without throwing any error. The logs showed the same error: The solution was (as root): Looks like, instead of resolvconf, openresolv was…

-

Mastodon server: email

Always a hassle to get mail delivery to work. Had a similar problem with a VoIP (Nexmo SMS/call forwarding) tool that just refused to work using local mail servers without a valid cert. Gave up and started using Mailgun. Long story short: use something like Mailgun or another provider. Using localhost SMTP server support seems…

-

Feed2Toot

Started looking into a service to auto-post from this blog onto my Mastodon feed. Feed2Toot fit the bill perfectly. I wanted to run the whole thing from a Docker container, though, so I’ll quickly write a how-to. This whole thing runs from a Raspberry Pi, as root. No k8s or k3s for me. The path…

-

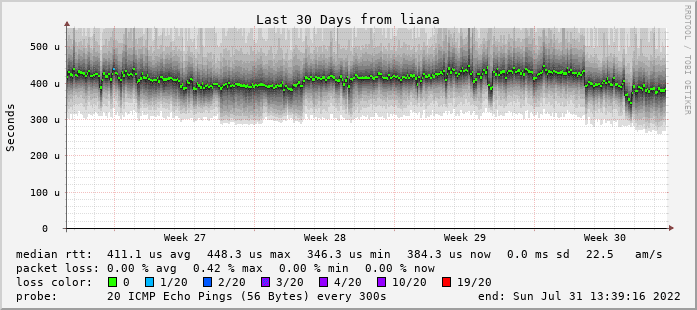

Smokeping.eu

I’ve revamped my Smokeping infra a bit since 2020. First off, I’ve started using the smokeping.eu domain that Bianco got 10 or so years ago instead of weird URLs under the superuser.one domain. It’s running on four nodes as we speak: a virtual machine on a colocation server at Leaseweb, Amsterdam, NL -> leaseweb.nl.smokeping.eu a…

-

Remote desktop and Wake-on-LAN

Shan uses her iPad a lot, but a lot of the more serious (interior design) work needs to happen on AutoCAD or Photoshop. That is just not going to work on an iPad. When we’re travelling (read: holiday) she’s carrying an old Lenovo ThinkPad 13 (great device!) just “in case” she needs to open AutoCAD…

-

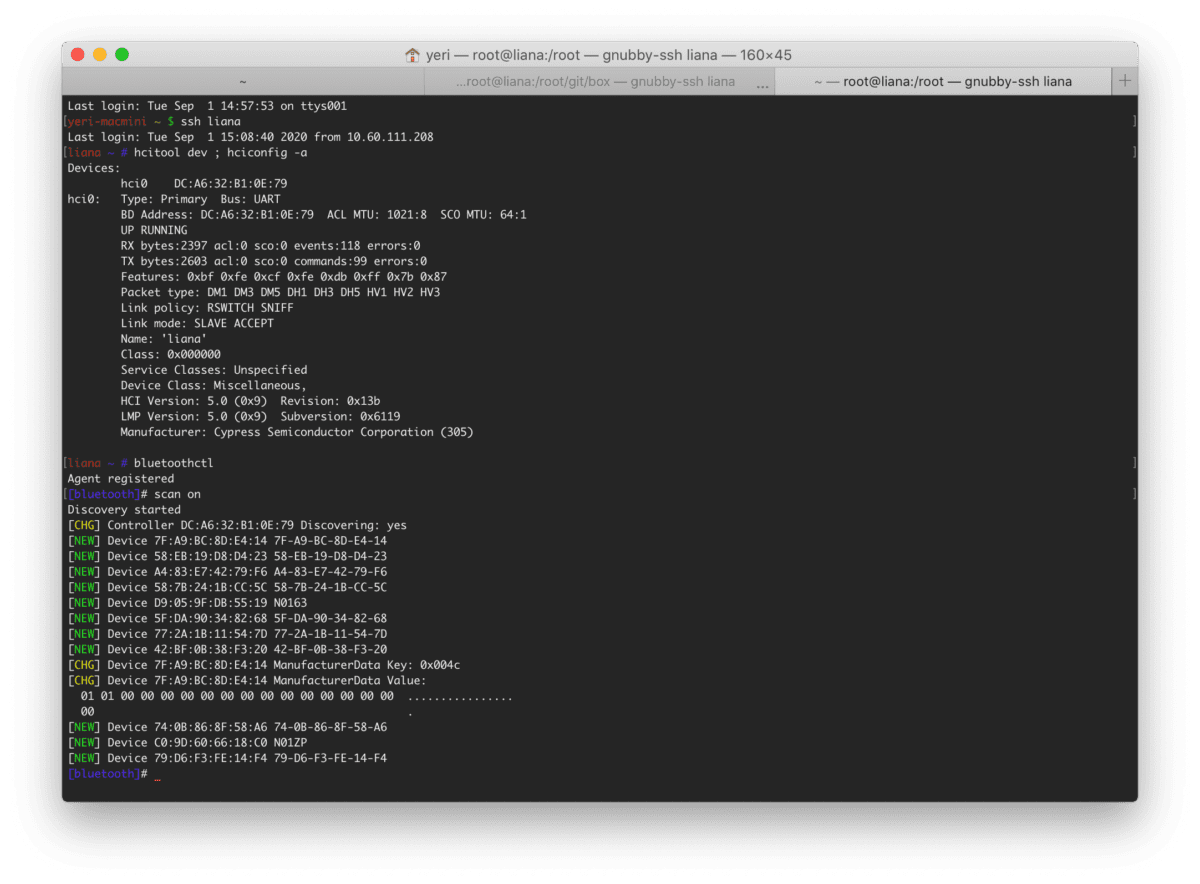

Making Bluetooth work on RPi4

I rarely use Bluetooth on my RPis. I’m already facing enough issues with my iMac and Mac Mini (it lags, it randomly disconnects in meetings, etc.). My pwnagotchi, on the other hand, relies on a BLE network to connect to the internet: for now I am using my iPad, and while that works, it…

-

Ideal travel router: GL-AR750S

Right. With the pandemic and all, none of us are going to travel much, but still… About a year ago I bought myself an OpenWrt router to use on the plane and in hotels. So far I really like both the device and the Hong Kong-based brand (launching new and updated products, and…

-

Raspberry Pi 4 + SSD

All right. With the release of the new RPi4 with 8 GB of RAM, I had to get myself one to see if it was already a viable desktop replacement for surfing and email. While an SD card works fine for certain tasks (things that don’t require a lot of I/O), for a desktop that’s…

-

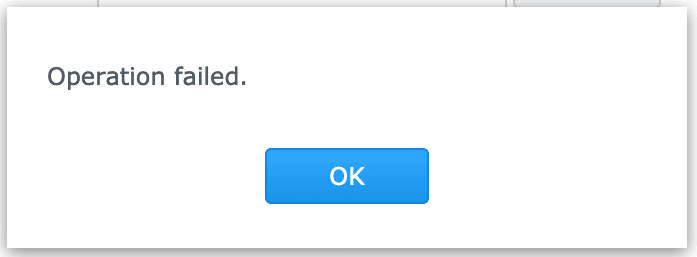

Synology “Operation Failed” manually updating package

Resilio Sync released an update last week, and on Synology these packages don’t auto-update. Time to manually update the packages again. On my DS1515 (more RAM, more CPU) the manual update goes fine (stop service, manual update, browse for file, upload, start service), but on my DS216j, not so much. Attempting to upload the…