Tag: fediverse

-

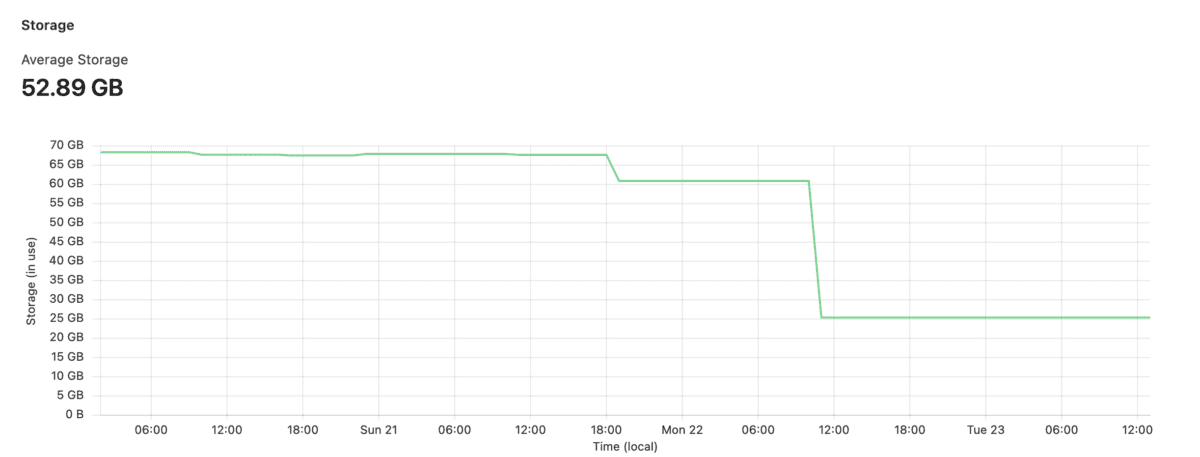

Keeping Mastodon storage in check

For my Mastodon instance, I use Cloudflare R2, mainly for two reasons: … While I didn’t care much about storage any more, I still wanted to make sure it was kept in check, again for two reasons: … I run Mastodon in a Docker container, so your commands may vary (basically, tootctl X Y is what…
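If you want to schedule that kind of cleanup, a small wrapper can build the `docker exec` calls for you. This is a minimal sketch, assuming a container named `mastodon-web-1` (hypothetical; check `docker ps` for yours) and that `tootctl` is on the container's PATH, as it is in the official image. `tootctl media remove --days` and `tootctl preview_cards remove --days` are real subcommands, but the retention periods below are arbitrary examples, not this post's settings. With `dry_run=True` (the default) the script only prints what it would run.

```python
import subprocess

# Hypothetical container name; replace with whatever `docker ps` shows for your web container.
CONTAINER = "mastodon-web-1"


def cleanup_commands(container: str, media_days: int = 7, card_days: int = 30) -> list[list[str]]:
    """Build `docker exec` invocations for two common tootctl cleanup tasks."""
    base = ["docker", "exec", container, "tootctl"]
    return [
        # Remove locally cached copies of remote media older than media_days.
        base + ["media", "remove", "--days", str(media_days)],
        # Remove cached preview card images older than card_days.
        base + ["preview_cards", "remove", "--days", str(card_days)],
    ]


def run_cleanup(container: str, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them, stopping on the first failure."""
    for cmd in cleanup_commands(container):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_cleanup(CONTAINER)
```

Run it with `dry_run=False` from cron (or a systemd timer) once the printed commands look right for your setup.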

-

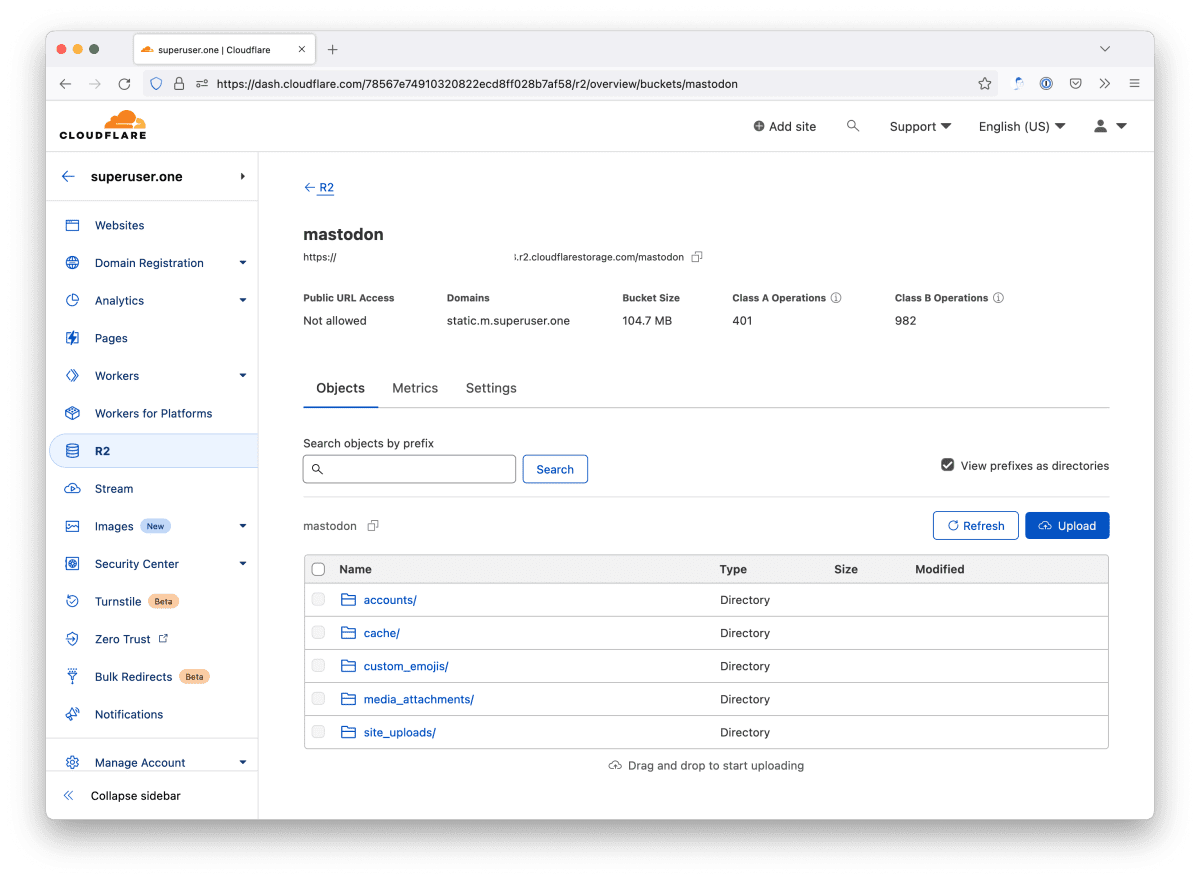

Mastodon server: R2

This is a very short post because, to be honest, I didn’t figure much out myself. My uploads and static files are now saved in R2 under their own URL (part of my enterprise zone), so my normal caching rules and other settings apply. Add these to your application.env file: The token/API key is a…
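The exact variables are cut off in this excerpt. As a hedged sketch, the snippet below checks an env file for the standard Mastodon S3 settings an R2 setup usually touches: `S3_ENDPOINT` (for R2, `https://<account-id>.r2.cloudflarestorage.com`), `S3_ALIAS_HOST` (the custom URL the files are served from), plus the bucket and credential variables. The `REQUIRED_S3_KEYS` set is an assumption for illustration, not this post's configuration.

```python
# Hypothetical checklist: standard Mastodon S3 variables an R2 setup typically needs.
REQUIRED_S3_KEYS = {
    "S3_ENABLED",
    "S3_BUCKET",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "S3_ENDPOINT",
    "S3_ALIAS_HOST",
}


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, ignoring blank lines and # comments."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def missing_s3_keys(text: str) -> list[str]:
    """Return the required keys that are absent or empty, sorted alphabetically."""
    env = parse_env(text)
    return sorted(key for key in REQUIRED_S3_KEYS if not env.get(key))


sample = """
S3_ENABLED=true
S3_BUCKET=mastodon-media
# S3_ALIAS_HOST=files.example.com
"""
print(missing_s3_keys(sample))
```

Commented-out lines count as missing, which catches the common mistake of leaving an example value disabled.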

-

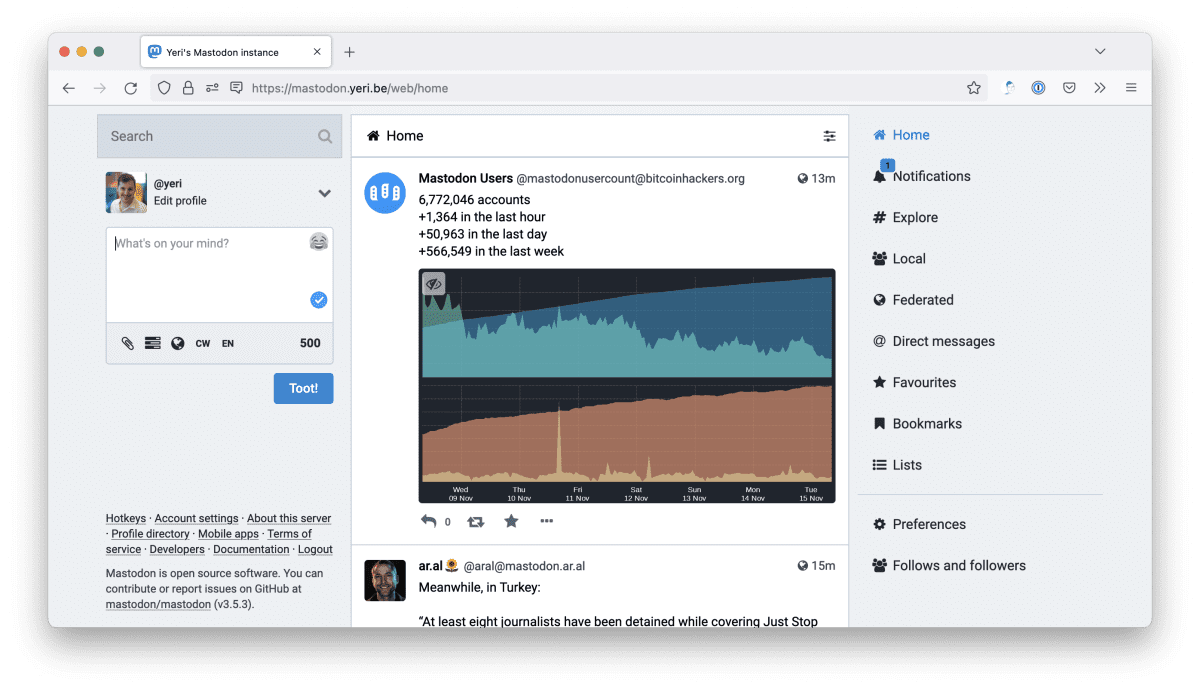

Using Mastodon with Cloudflare

If you’re using Mastodon behind Cloudflare’s CDN/protection with minify turned on, you may notice the site looks broken (after a few visits, once requests hit the Cloudflare cache), along with errors in the browser dev tools similar to `Failed to find a valid digest in the 'integrity' attribute, with computed SHA-256 integrity: …`. Basically, the SHA-256 hash doesn’t…
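The mechanism behind that error is easy to demonstrate. A Subresource Integrity value is `sha256-` followed by the base64-encoded SHA-256 digest of the exact bytes of the file, so any change an edge minifier makes to a CSS or JS asset yields a digest that no longer matches the page's `integrity` attribute. This is a minimal sketch; the two CSS strings are made-up examples.

```python
import base64
import hashlib


def sri_sha256(content: bytes) -> str:
    """Return a Subresource Integrity value: 'sha256-' + base64(SHA-256 digest)."""
    digest = hashlib.sha256(content).digest()
    return "sha256-" + base64.b64encode(digest).decode("ascii")


original = b"body {\n  color: black;\n}\n"  # asset as the server built it
minified = b"body{color:black}"             # what an edge minifier might serve instead

expected = sri_sha256(original)  # value baked into the integrity attribute
served = sri_sha256(minified)    # what the browser computes on the bytes it receives
print(expected == served)        # False: the browser refuses to apply the asset
```

Because the check runs on raw bytes, even whitespace-only changes break it, which is why edge minification and SRI don't mix.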

-

Mastodon server: email

Always a hassle to get mail delivery to work. Had a similar problem with a VoIP (Nexmo SMS/call forwarding) tool that just refused to work using local mail servers without a valid cert. Gave up and started using Mailgun. Long story short: use something like Mailgun or another provider. Using localhost SMTP server support seems…
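Before putting a provider's credentials into Mastodon's `SMTP_SERVER`, `SMTP_PORT`, `SMTP_LOGIN` and `SMTP_PASSWORD` settings, it can help to confirm they work at all. This is a minimal sketch using Python's standard `smtplib`, assuming a submission port that supports STARTTLS (587 is typical; Mailgun's SMTP host is `smtp.mailgun.org`). `check_smtp` is a hypothetical helper, and the `smtp_factory` parameter exists only so it can be tested without a network.

```python
import smtplib


def check_smtp(host: str, port: int, user: str, password: str,
               smtp_factory=smtplib.SMTP) -> bool:
    """Connect, upgrade with STARTTLS, and log in; return True if login succeeds."""
    try:
        with smtp_factory(host, port, timeout=10) as server:
            server.starttls()             # encrypt before sending credentials
            server.login(user, password)  # raises SMTPAuthenticationError on bad creds
        return True
    except (smtplib.SMTPException, OSError):
        # Covers auth failures, protocol errors, refused connections and timeouts.
        return False
```

For example, `check_smtp("smtp.mailgun.org", 587, "postmaster@mg.example.com", "your-smtp-password")` returns `True` only if the login succeeds; the login and password here are placeholders.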

-

Feed2Toot

Started looking into a service to auto-post from this blog onto my Mastodon feed. Feed2Toot fit the bill perfectly. I wanted to run the whole thing from a Docker container, though, so I’ll quickly write a how-to. This whole thing runs from a Raspberry Pi, as root. No k8s or k3s for me. The path…
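Part of what a tool like Feed2Toot does is remember which feed entries it has already posted, so each run only toots new ones. The sketch below is not Feed2Toot's code; it is a hypothetical illustration of that idea using the standard library's XML parser on an RSS 2.0 feed, keyed on each item's `<link>`. Persisting `already_posted` between runs (a file, a small database) is left out.

```python
import xml.etree.ElementTree as ET


def new_entries(rss_xml: str, already_posted: set[str]) -> list[tuple[str, str]]:
    """Return (title, link) for RSS items not yet posted, oldest first."""
    root = ET.fromstring(rss_xml)
    items: list[tuple[str, str]] = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        # Skip items without a link (nothing to dedupe on) and ones already tooted.
        if link and link not in already_posted:
            items.append((title, link))
    # Feeds usually list newest first; reverse so older posts are tooted first.
    return list(reversed(items))


feed = """<rss version="2.0"><channel><title>Blog</title>
<item><title>Post B</title><link>https://example.com/b</link></item>
<item><title>Post A</title><link>https://example.com/a</link></item>
</channel></rss>"""

for title, link in new_entries(feed, {"https://example.com/a"}):
    print(f"{title} {link}")
```

Keying on the link rather than the title means a post you retitle later won't be tooted twice.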